Why AI/ML Matters Now in Healthcare

The healthcare industry is standing at a crossroads. Rising operating costs, a shortage of skilled clinicians, and growing demands for personalized care are straining traditional systems. At the same time, regulators are tightening oversight, and patients expect digital-first experiences similar to what they get in banking or retail.

Artificial Intelligence (AI) and Machine Learning (ML) are emerging as practical answers to these challenges. They are being applied to reduce administrative burden, predict patient risk, and support clinical decisions in ways that traditional systems cannot deliver.

The opportunity is significant. Accenture projects that AI in healthcare could unlock $150 billion in annual savings for the U.S. healthcare economy by 2026. Yet progress will depend on how leaders move from pilots to scaled deployments that deliver measurable outcomes.

This guide explores where AI and ML are already driving results, the barriers executives must plan for, and the strategies that distinguish successful initiatives from those that stall.

1. The State of AI/ML in Healthcare (2025 Snapshot)

AI and ML adoption in healthcare is no longer limited to experiments, but progress is uneven. Larger health systems and payers are embedding models into daily operations, while smaller organizations remain stuck in pilot projects. The difference lies in whether leadership treats AI as a strategic priority or as an isolated IT tool.

In clinical practice, radiology and pathology are the furthest ahead. FDA-cleared algorithms are assisting with image interpretation, helping clinicians detect conditions such as strokes or lung nodules more quickly. In emergency settings, these tools are already influencing triage decisions.

On the financial side, payers have advanced faster than providers. AI models are predicting denials and detecting fraudulent claims at scale, shortening payment cycles and improving member satisfaction.

What defines the current state is not whether sophisticated models exist (they do) but whether organizations can operationalize them across workflows without adding new complexity. That shift from pilots to scale is what separates leaders from laggards in 2025.

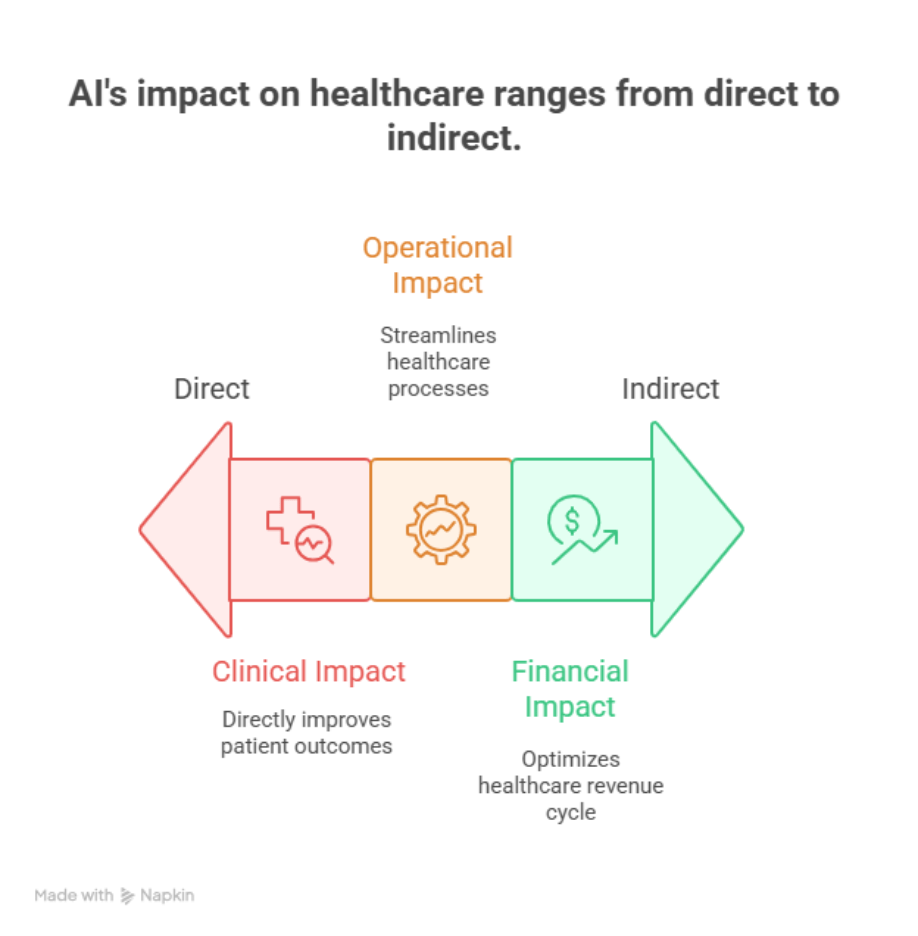

2. Clinical Impact of AI/ML

Radiology, pathology, and ophthalmology remain the most visible use cases. AI systems that once played the role of a “second opinion” are now being used to prioritize scans and escalate critical cases in crowded departments. This is changing how clinicians allocate time, not just how they interpret results.

Predictive analytics is moving beyond retrospective reporting. Hospitals are deploying models that combine patient history, lab values, and social data to flag risks such as sepsis or readmissions before they occur. The focus has shifted to real-time interventions that alter the course of care.

Personalized medicine is also gaining traction. By combining genomic data with continuous streams from wearables, clinicians are tailoring treatments for oncology and chronic conditions. In practice, this can mean adjusting a drug regimen based on how a single patient metabolizes it rather than relying on broad averages.

Across these domains, AI’s value is no longer measured by theoretical accuracy but by whether it shortens decision time, prevents complications, and frees clinicians to focus on care instead of repetitive tasks.

3. Operational and Financial Impact of AI/ML

If clinical care is where AI draws the headlines, operations and finance are where it proves its worth. For many hospitals and payers, this is the first area where AI has delivered measurable returns.

Revenue cycle management has been one of the fastest adopters. Machine learning models are already scanning claims before submission to flag patterns that historically led to denials. Instead of staff chasing rejections after the fact, errors are caught up front, reducing rework and speeding up payment cycles. Some payers are also using anomaly detection to spot fraudulent billing behavior in near real time, shrinking the window between fraud and recovery.
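As a minimal sketch of pre-submission claim scrubbing, the code below flags claims whose (payer, CPT code) pattern was historically denied at a high rate. Production denial-prediction models are usually trained classifiers over many more claim features; this substitutes the simplest historical-rate lookup, and the field names and the 0.3 threshold are hypothetical.

```python
from collections import defaultdict

def denial_rates(history, keys=("payer", "cpt_code")):
    """Historical denial rate for each claim pattern (hypothetical field names)."""
    counts = defaultdict(lambda: [0, 0])  # pattern -> [denied, total]
    for claim in history:
        pattern = tuple(claim[k] for k in keys)
        counts[pattern][1] += 1
        if claim["denied"]:
            counts[pattern][0] += 1
    return {p: denied / total for p, (denied, total) in counts.items()}

def flag_before_submission(claims, rates, threshold=0.3, keys=("payer", "cpt_code")):
    """Return (claim id, historical rate) for claims at or above the threshold."""
    flagged = []
    for claim in claims:
        # Unseen patterns default to 0.0 and pass; a real scrubber might route them to review.
        rate = rates.get(tuple(claim[k] for k in keys), 0.0)
        if rate >= threshold:
            flagged.append((claim["id"], rate))
    return flagged

history = [
    {"payer": "A", "cpt_code": "99214", "denied": True},
    {"payer": "A", "cpt_code": "99214", "denied": False},
    {"payer": "B", "cpt_code": "99214", "denied": False},
]
rates = denial_rates(history)
new_claims = [
    {"id": "c1", "payer": "A", "cpt_code": "99214"},
    {"id": "c2", "payer": "B", "cpt_code": "99214"},
]
print(flag_before_submission(new_claims, rates))  # [('c1', 0.5)]
```

With only a handful of historical claims per pattern the rates are noisy, so a real deployment would typically require a minimum sample size before trusting a pattern.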

Administrative tasks are another area where AI is gaining traction. Clinical documentation assistants that transcribe and structure conversations between physicians and patients are gradually moving from pilots to everyday use. While not perfect, they cut down the time doctors spend typing into EHRs, which has been a major source of burnout. In parallel, AI-driven scheduling systems are balancing provider calendars with patient demand, reducing no-shows and smoothing bottlenecks across clinics.

Workforce management is emerging as a quieter but critical use case. Hospitals are testing AI tools that match staffing levels with predicted patient volume, accounting for seasonal surges or local events. When combined with predictive analytics, these systems help avoid both understaffing in emergencies and costly overstaffing.
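A minimal sketch of volume-based staffing, assuming a naive seasonal forecast (average census on the same weekday in past weeks) and a hypothetical ratio of four patients per nurse plus a 10% surge buffer. Real staffing models would add local events, acuity, and unit-specific or regulatory ratios.

```python
import math
from statistics import mean

def forecast_census(history, weekday):
    """Naive seasonal forecast: mean census on the same weekday in past weeks."""
    same_day = [census for day, census in history if day == weekday]
    if not same_day:
        raise ValueError(f"no history for {weekday}")
    return mean(same_day)

def nurses_needed(census, patients_per_nurse=4, surge_buffer=0.10):
    """Convert a census forecast into headcount, rounding up so no shift is short."""
    return math.ceil(census * (1 + surge_buffer) / patients_per_nurse)

history = [("Mon", 30), ("Tue", 26), ("Mon", 34), ("Tue", 24)]
monday = forecast_census(history, "Mon")  # mean of 30 and 34 -> 32
print(nurses_needed(monday))  # ceil(32 * 1.1 / 4) = ceil(8.8) -> 9
```

Rounding up trades a little overstaffing risk for protection against understaffing; the surge buffer is the lever that balances the two costs described above.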

4. Technology Foundations for AI/ML Success

Every successful AI program in healthcare rests on infrastructure decisions made long before the first model is deployed. The organizations that struggle are often those that underestimated this foundation.

Data quality and accessibility remain the primary barriers. Clinical data sits fragmented across EHRs, lab systems, and imaging archives, often in incompatible formats. Without consistent pipelines to clean, normalize, and tag this data, even the most sophisticated model produces unreliable results. Health systems are investing heavily in interoperability frameworks such as FHIR to unlock the ability to train and deploy AI at scale.
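To make "normalize and tag" concrete, here is a minimal sketch that maps one legacy lab row onto a FHIR R4 Observation-shaped dictionary. The local code "GLU", the one-entry mapping table, and the row field names are hypothetical; LOINC 2345-7 (glucose in serum or plasma) and the UCUM unit-system URL are standard identifiers. A real pipeline would use a terminology service and validate output against the FHIR specification.

```python
# Hypothetical local-code -> LOINC table; real tables are far larger and curated.
LOCAL_TO_LOINC = {
    "GLU": ("2345-7", "Glucose [Mass/volume] in Serum or Plasma"),
}

def to_fhir_observation(row):
    """Map one legacy lab row (a dict) to a FHIR R4 Observation-shaped dict.

    Unmapped codes raise KeyError so they can be routed to manual review
    instead of silently entering the training set.
    """
    loinc_code, display = LOCAL_TO_LOINC[row["test_code"].strip().upper()]
    return {
        "resourceType": "Observation",
        "status": "final",
        "code": {"coding": [{"system": "http://loinc.org",
                             "code": loinc_code, "display": display}]},
        "subject": {"reference": f"Patient/{row['patient_id']}"},
        "effectiveDateTime": row["collected_at"],
        "valueQuantity": {
            "value": float(row["result"]),
            "unit": row["unit"],
            "system": "http://unitsofmeasure.org",  # UCUM
            "code": row["unit"],  # assumes the source unit is already a valid UCUM code
        },
    }

obs = to_fhir_observation({"test_code": " glu ", "patient_id": "123",
                           "collected_at": "2025-03-01T08:30:00Z",
                           "result": "98", "unit": "mg/dL"})
print(obs["code"]["coding"][0]["code"])  # 2345-7
```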

Cloud infrastructure is also becoming unavoidable. On-premises servers struggle to keep up with the compute requirements of training large models or running them in real time. Many organizations are adopting hybrid approaches, where sensitive patient data stays local but AI models are trained and updated in the cloud. This balance allows scalability without compromising compliance.

Integration into legacy workflows is the final piece. An accurate model that sits outside the clinician’s daily tools adds little value. APIs and middleware are being used to embed AI recommendations directly into existing EHR screens or claims systems. The aim is for the AI to surface insight where work is already happening, not in a separate dashboard that creates another layer of friction.
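One common way to surface a model's output inside the EHR screen is the HL7 CDS Hooks standard, where a service returns "cards" that the EHR renders in context. The text does not name a specific mechanism, so treat this as one illustrative option: a minimal sketch that turns a risk score into a CDS Hooks-style response. The model label and the 0.4/0.7 cut-offs are hypothetical.

```python
def risk_to_cds_response(risk, model_label="readmission-risk (hypothetical)"):
    """Wrap a 0-1 risk score in a CDS Hooks-style response body."""
    if risk >= 0.7:
        indicator = "critical"
    elif risk >= 0.4:
        indicator = "warning"
    else:
        # Return no card for low scores to avoid adding to alert fatigue.
        return {"cards": []}
    return {
        "cards": [{
            "summary": f"Predicted readmission risk: {risk:.0%}",
            "indicator": indicator,
            "detail": "Model-generated estimate; review before acting.",
            "source": {"label": model_label},
        }]
    }

print(risk_to_cds_response(0.82)["cards"][0]["summary"])  # Predicted readmission risk: 82%
```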

The lesson for executives is clear: AI initiatives fail less often because of weak algorithms and more often because the surrounding infrastructure was never designed to support them. Investing in clean data pipelines and seamless integration is what separates pilots from production.

5. Risks, Compliance, and Responsible AI

AI in healthcare carries as much risk as opportunity. The challenge for leaders is managing how these systems affect trust, compliance, and patient safety.

Bias in Clinical Models

AI models are only as reliable as the data they learn from. If training sets underrepresent women or minority groups, predictions may underperform for those patients. This has already been observed in cardiology and dermatology tools, where misdiagnosis rates varied by demographic group. Leaders must insist on diverse datasets and ongoing bias audits.
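A bias audit can start as simply as comparing one performance metric across demographic groups. The minimal sketch below uses sensitivity (the share of true cases the model catches), which is only one of several fairness measures; the group labels, the toy records, and the 5-point gap threshold are hypothetical.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Sensitivity (true-positive rate) per group.

    records: iterable of (group, actual, predicted) with boolean labels.
    """
    tp = defaultdict(int)
    positives = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                tp[group] += 1
    return {g: tp[g] / positives[g] for g in positives}

def audit_gap(rates, max_gap=0.05):
    """Flag the audit if best and worst group sensitivity differ by more than max_gap."""
    gap = max(rates.values()) - min(rates.values())
    return gap, gap > max_gap

records = (
    [("A", True, True)] * 3 + [("A", True, False)]
    + [("B", True, True)] * 2 + [("B", True, False)] * 2
    + [("A", False, True)]  # false positives do not affect sensitivity
)
rates = sensitivity_by_group(records)
print(rates)             # {'A': 0.75, 'B': 0.5}
print(audit_gap(rates))  # (0.25, True)
```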

Overreliance on Automation

AI is meant to support, not replace, human judgment. Studies of clinical decision support tools show that clinicians sometimes accept algorithmic outputs without enough scrutiny, leading to errors when the model is wrong. Clear guidance on how AI should be used within workflows is critical.

Data Integrity and Liability

Incorrect or incomplete data feeding into AI can result in flawed clinical advice or billing errors. That risk does not stay with the software vendor alone. Hospitals and payers could face liability under false claims laws or patient safety regulations if AI-driven recommendations go unchecked. Documentation of model versions, audit logs, and escalation processes should be part of every deployment.
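A minimal sketch of such an audit-log entry, assuming JSON-lines storage: each record ties an AI output to the exact model name and version and to a hash of its inputs, and notes whether a human signed off. The field names are hypothetical; regulated deployments would add write-once storage and retention controls.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(model_name, model_version, inputs, output, reviewer=None):
    """Build one audit-log entry linking an AI output to its model version and inputs."""
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model_name,
        "model_version": model_version,
        # Store a hash, not raw inputs, so the log is not another copy of PHI.
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "output": output,
        "human_reviewer": reviewer,  # None means no sign-off yet: an escalation candidate
    }

def append_to_log(path, record):
    """Append the entry as one JSON line."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")

entry = audit_record("denial-predictor", "1.4.0", {"cpt_code": "99214", "payer": "A"}, "flag")
print(entry["model_version"], entry["human_reviewer"])  # 1.4.0 None
```

Sorting keys before hashing makes the hash independent of field order, so the same inputs always produce the same fingerprint during an investigation.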

Security Vulnerabilities

AI increases the attack surface. Models connected to medical devices or cloud systems can be manipulated with adversarial data or exploited through weak integration points. Conventional IT security assessments often miss these risks. Regular penetration testing and red-team exercises should extend to AI-enabled systems.

Regulatory Uncertainty

Compliance rules are struggling to keep pace. HIPAA, FDA regulations, and emerging state AI laws offer partial coverage, but none address the full lifecycle of machine learning models. Executives should expect audits that demand model inventories, explainability reports, and continuous monitoring as regulators adapt.

6. Measuring ROI of AI/ML in Healthcare

Return on AI depends on what you choose to measure and how quickly you can attribute improvements to the model rather than to unrelated workflow changes. Treat this like any major service line: define the value levers, instrument them, and review them on a fixed cadence.

What to measure

- Cycle times. Claim submission to payment, prior authorization turnaround, report turnaround in imaging, and average time in notes. These show whether AI removes wait states.

- Quality and accuracy. Coding accuracy, documentation completeness, radiology discrepancy rates, and denial prevention rate. If quality falls while speed rises, the gain is fragile.

- Throughput and capacity. Patients seen per session, reads per radiologist, claims processed per FTE. Capacity tells you if the same staff can serve more demand.

- Cost to serve. Unit costs such as cost per claim or cost per documented encounter. Include model inference costs and rework.

- Safety and compliance signals. Adverse events caught, audit findings, and explainability reviews completed. These protect ROI from being clawed back by risk.
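Cost to serve is simple arithmetic; the point of the list above is what goes into the numerator. A minimal sketch with hypothetical monthly figures, showing how inference and rework costs enter alongside labor:

```python
def cost_per_claim(claims, labor, inference=0.0, rework=0.0):
    """Unit cost to serve: all-in cost divided by claims processed."""
    if claims <= 0:
        raise ValueError("claims must be positive")
    return (labor + inference + rework) / claims

# Hypothetical month before and after automation (same claim volume).
before = cost_per_claim(10_000, labor=50_000, rework=8_000)                  # 5.8
after = cost_per_claim(10_000, labor=35_000, inference=4_000, rework=3_000)  # 4.2
print(f"saving per claim: {before - after:.2f}")  # saving per claim: 1.60
```

Leaving the 4,000 in inference cost out of the second line would overstate the saving by 0.40 per claim.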

How to prove attribution

- A/B or phased rollout. Start with one service line or site and keep a matched control. Move from pilot to additional units only after you see persistent improvement for two or more review cycles.

- Pre-agreed baselines. Lock baseline KPIs over the 8–12 weeks before go-live. If documentation time drops or denials fall afterward, you will have a clean before–after comparison.

- Shadow metrics. Track “hidden” costs such as exception handling, manual reviews, and model review time. These can erase reported gains if ignored.
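One standard way to turn a matched control into an attribution estimate is difference-in-differences: the change at the AI site minus the change at the control site over the same period. The text does not prescribe a method, so this is a hedged sketch that assumes both sites would otherwise have trended alike. The second function nets shadow metrics out of gross time savings. All figures are hypothetical.

```python
def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Effect attributable to the rollout: treated change minus control change."""
    return (treated_after - treated_before) - (control_after - control_before)

def net_hours_saved(gross_hours, exception_hours, manual_review_hours, model_review_hours):
    """Subtract hidden workload (shadow metrics) from reported time savings."""
    return gross_hours - (exception_hours + manual_review_hours + model_review_hours)

# Denial rate in percent: pilot site 12 -> 8, matched control 12 -> 11.
print(diff_in_diff(12, 8, 12, 11))       # -3: three points attributable, not four
print(net_hours_saved(400, 60, 80, 20))  # 240 hours, not the gross 400
```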

Time-to-value guidance

- Operational automations such as claim scrubbing, document extraction, and scheduling typically show returns in weeks, not quarters. The American Hospital Association outlines how pre-submission claim checks and denial prediction reduce rework and speed payment.

- Ambient documentation can pay back when it consistently cuts time in notes and EHR clicks. Recent reports from The Permanente Medical Group and peer-reviewed studies describe large-scale use with material time savings for clinicians.

- Enterprise analytics and clinical models often need longer because they depend on data quality, integration, and change management. Plan for staged milestones rather than a single ROI event.

Signals your business case is real

- A repeatable reduction in turnaround or denial rate that persists after the first month.

- Fewer handoffs and fewer exceptions per thousand encounters or claims.

- Workload shifts from low-value triage to higher-value review and decision work.

A recent example from revenue cycle automation shows what this looks like at scale: Omega Healthcare reports saving over 15,000 employee hours per month and cutting turnaround time by half after adopting AI document processing, with clients realizing measurable ROI. The point is not the headline number. It is that the savings were tied to a specific process, measured continuously, and achieved with sustained accuracy.

Common pitfalls that destroy ROI

- Measuring only pilot speed-ups. Gains vanish when the model meets real volumes and messy data. Use volumes that match peak weeks.

- Ignoring integration friction. A model that lives outside the EHR or claims system will not be used. Embed insights where work already happens.

- Counting savings twice. If an automation replaces overtime, remove the overtime budget from the baseline. If it replaces vendor tasks, adjust the contract.

- Treating model drift as an afterthought. Schedule periodic revalidation. ROI erodes when models are not refreshed.
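Scheduled revalidation works better with a trigger. One widely used drift check, not named in the text and offered here as an assumption, is the Population Stability Index (PSI), which compares the binned distribution of a model input or score today against its training baseline. The 0.25 cut-off is a common rule of thumb rather than a standard, and the bin counts are hypothetical.

```python
import math

def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e), using proportions."""
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # floor avoids log(0) for empty bins
        a_pct = max(a / a_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score

def needs_revalidation(score, threshold=0.25):
    """Rule-of-thumb trigger for an unscheduled model review."""
    return score > threshold

baseline = [25, 25, 25, 25]  # score-bin counts at validation time
print(round(psi(baseline, [10, 20, 30, 40]), 3))           # 0.228: moderate shift
print(needs_revalidation(psi(baseline, [5, 15, 30, 50])))  # True
```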

Executive checklist

- One owner per KPI, one source-of-truth dashboard, one cadence for review.

- A contract that ties vendor payment to agreed outcomes where appropriate.

- A path to stop, fix, or decommission models that do not meet thresholds.

The organizations that report durable ROI are the ones that measure what matters, instrument the process end-to-end, and treat AI like a living service rather than a one-time project.

7. Where AI/ML in Healthcare Is Heading

Generative AI moves from pilots to embedded tools

Major platform vendors are shipping ambient documentation and clinical copilots that sit inside daily workflows. Microsoft’s Nuance team introduced Dragon Copilot for note-taking, evidence summaries, and referral letters, which signals a shift from stand-alone apps to embedded assistants in clinical systems.

Agentic systems begin to take on closed-loop tasks

Research is moving from “AI as a tool” to “AI as an agent” that can perceive context, plan multi-step actions, and call external tools. In medicine, this starts with bounded tasks such as triage support or protocol checks, with human oversight. Expect early deployments in low-risk, high-volume workflows.
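The "bounded tasks with human oversight" pattern can be sketched as a gate between what an agent proposes and what actually runs: allow-listed low-risk actions execute automatically, and everything else waits for a human decision. The action names, the allow-list, and the approve callback are hypothetical; this is not how any particular agent framework implements oversight.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical allow-list of bounded, low-risk actions.
AUTO_APPROVED = {"check_protocol", "draft_triage_note"}

@dataclass
class ProposedAction:
    name: str
    rationale: str  # shown to the human reviewer

def route(action: ProposedAction, approve: Callable[[ProposedAction], bool]) -> str:
    """Run allow-listed actions; require human sign-off for everything else."""
    if action.name in AUTO_APPROVED:
        return "auto_executed"
    return "human_approved" if approve(action) else "rejected"

def clinician_says_no(action: ProposedAction) -> bool:
    return False  # stand-in for a real review queue

print(route(ProposedAction("check_protocol", "sepsis bundle timing"), clinician_says_no))  # auto_executed
print(route(ProposedAction("order_lab", "possible sepsis"), clinician_says_no))  # rejected
```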

Interoperability unlocks larger training and inference datasets

Nationwide exchange through TEFCA is scaling, with thousands of organizations now live and tens of millions of documents exchanged since go-live. This makes cross-network retrieval and normalization more practical, which in turn strengthens both predictive models and real-time decision support.

Regulation and assurance frameworks get sharper

ONC’s HTI-1 rule adds transparency and documentation requirements for decision support interventions, which affects how AI explanations and evidence are exposed in certified health IT. The FDA continues to evolve guidance for AI/ML-based Software as a Medical Device (SaMD), and the NIST AI Risk Management Framework is becoming the reference for organizational guardrails. Leaders should expect audits to look for model inventories, change logs, and monitoring plans.

Administrative AI accelerates under policy pressure

CMS finalized prior-authorization and interoperability API requirements that push payers and providers to automate data exchange. This raises the ceiling for AI that pre-checks documentation, predicts denials, and shortens approval cycles.

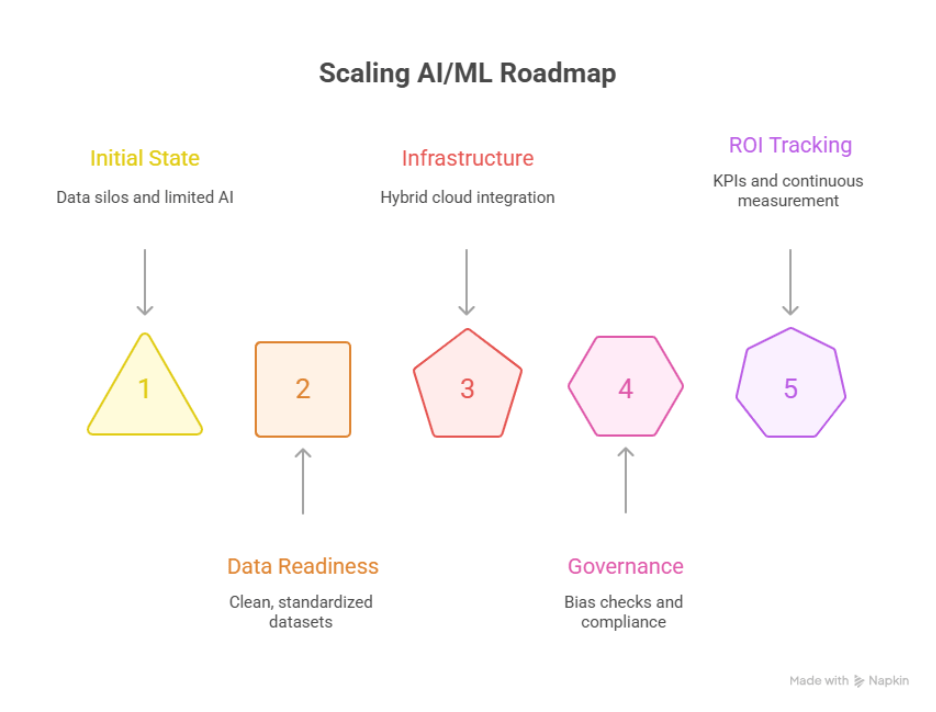

8. Executive Guidance: Building a Scalable AI/ML Roadmap

Start where risk is low and the signal is clear

Target revenue cycle checks, documentation assistance, or scheduling first. These areas have measurable cycle-time gains and fewer clinical risk factors. Move clinical decision support later, once governance and monitoring are in place. This sequencing aligns with how federal health programs describe the capabilities needed to scale AI responsibly.

Design for audit from day one

Keep a register of models, training data sources, intended use, and known limitations. Map how explanations and evidence will surface in the EHR to satisfy HTI-1 decision support transparency. Link each deployment to an owner, a review cadence, and a rollback plan.
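A minimal sketch of such a register as a Python dataclass, assuming a fixed review interval per model. The fields mirror the paragraph above; the example entry and its values are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional

@dataclass
class ModelRecord:
    """One entry in a model register."""
    name: str
    version: str
    intended_use: str
    owner: str
    review_every_days: int
    last_reviewed: date
    training_data_sources: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)
    rollback_version: Optional[str] = None  # version to restore if this one is pulled

    def review_overdue(self, today: date) -> bool:
        return today > self.last_reviewed + timedelta(days=self.review_every_days)

register = [
    ModelRecord("denial-predictor", "2.1", "Flag claims likely to be denied",
                owner="RCM director", review_every_days=90,
                last_reviewed=date(2025, 1, 1),
                training_data_sources=["2022-2024 remittance data"],
                known_limitations=["Not validated for Medicaid claims"],
                rollback_version="2.0"),
]
overdue = [m.name for m in register if m.review_overdue(date(2025, 4, 2))]
print(overdue)  # ['denial-predictor']
```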

Adopt a two-zone architecture

Use a protected data zone for PHI with strong lineage and access controls, and a scalable compute zone for training and inference. Hybrid patterns are common, with sensitive data retained on-premises and heavy training workloads in the cloud. This reduces latency to clinical systems while meeting security and compliance needs reflected in FDA and NIST guidance.
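A minimal sketch of what can happen at the boundary between the two zones, assuming records leave the protected zone only after direct identifiers are dropped and the medical record number is replaced by a keyed hash (HMAC-SHA256) whose key stays in the protected zone. The identifier list is a hypothetical subset; this is not full HIPAA de-identification, which also covers dates, geography, and other identifiers.

```python
import hashlib
import hmac

# Hypothetical subset of direct identifiers to drop before data leaves the protected zone.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "email", "ssn"}

def pseudonymize(record, secret_key: bytes):
    """Drop direct identifiers and replace the MRN with a keyed hash token."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS and k != "mrn"}
    # The token is stable (so records still join) but cannot be linked back to the MRN without the key.
    out["patient_token"] = hmac.new(secret_key, record["mrn"].encode("utf-8"),
                                    hashlib.sha256).hexdigest()
    return out

key = b"kept-in-protected-zone"  # hypothetical; load from a secrets manager in practice
record = {"mrn": "123", "name": "Jane Doe", "age": 54, "hba1c": 7.1}
safe = pseudonymize(record, key)
print(sorted(safe))  # ['age', 'hba1c', 'patient_token']
```

A keyed hash is used instead of a plain SHA-256 of the MRN because MRNs are short and guessable: without the key, an attacker in the compute zone cannot simply hash every possible MRN to reverse the tokens.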

Make interoperability a prerequisite, not an afterthought

Budget for data engineering that normalizes EHR, claims, imaging, and device data. Align with FHIR resources where possible and connect to TEFCA networks as they expand. Better data plumbing will matter more to outcomes than model choice.

Plan for partner dynamics in the platform era

EHR vendors are releasing overlapping AI features. Partnerships can accelerate adoption, but they can also shift into competition, as seen in the ambient documentation space. Keep an exit path and avoid single-vendor lock-in for core AI capabilities.

Tie the roadmap to policy tailwinds

Where CMS and ONC are pushing APIs and prior-auth automation, budget for AI that uses those rails. Where HTI-1 or FDA rules affect explainability and labeling, bake those requirements into acceptance criteria. This keeps projects compliant and shortens approval cycles.

Close with a simple operating rhythm

- One portfolio of AI use cases with risk tiers and owners

- One instrumentation plan that tracks cycle times, quality, safety, and cost to serve

- One governance cadence that reviews drift, incidents, and decommission decisions

Anchor the program on these basics, then scale into higher-stakes clinical workflows once the foundation is steady.

Conclusion: Moving from Pilots to Scaled Impact

AI and ML in healthcare are no longer future bets. They are already reshaping how care is delivered, how revenue cycles are managed, and how health systems plan for the next decade. The challenge for leaders is not whether the technology works, but whether it can be scaled responsibly across the organization without adding new risks.

Executives who succeed will be those who treat AI as a service line, not a side project, with clear ROI metrics, strong governance, and readiness for evolving regulation. The organizations that combine operational efficiency with clinical trust will set the pace for the industry.

If your roadmap includes AI, the next step is to align with partners who understand both the technical and regulatory demands of healthcare. Explore our Healthcare software development expertise, or connect with us directly at info@nalashaa.com to start a conversation.

Latest posts by Priti Prabha (see all)
